Playing With LangChain And All Those Possibilities

While waiting for my ChatGPT Plugin waitlist application to be approved, I tried out LangChain, a trending open-source project. I’m not sure why the approval took so long, since I applied on the day ChatGPT Plugins were released. In the meantime, I discovered some thrilling and entertaining features in LangChain.

Agents are indeed powerful tools, and I must say that the idea behind LangChain is quite impressive. The gap between those who can utilize AI and those who cannot is bound to widen, with the latter eventually being phased out.

Agents: Dynamically call chains based on user input

Up until now, our chains have run in a simple predetermined order: prompt template -> user input -> LLM API call -> result.
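To make that fixed order concrete, here is a minimal sketch in plain Python rather than LangChain itself. Both `fake_llm` and `run_chain` are hypothetical names I made up for illustration; `fake_llm` simply echoes the prompt so the example runs without an API key.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM API call; it only echoes the prompt."""
    return f"[LLM answer to: {prompt}]"


def run_chain(template: str, user_input: str, llm: Callable[[str], str]) -> str:
    """Run the fixed pipeline: fill the template, call the LLM, return the result."""
    prompt = template.format(question=user_input)  # prompt template + user input
    return llm(prompt)                             # LLM call -> result


template = "Answer concisely: {question}"
result = run_chain(template, "What is LangChain?", fake_llm)
print(result)
```

Every call follows the same steps in the same order; nothing in `run_chain` depends on what the model says.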

Agents, on the other hand, use an LLM to determine which actions to take and in what order. These actions can include using an external tool, observing the result, or returning it to the user.

Agents are systems that use a language model to interact with other tools. These can be used to do more grounded question/answering, interact with APIs, or even take actions.
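The decide, act, observe cycle can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not how LangChain implements agents: `scripted_llm` is a hypothetical stand-in that picks an action with a keyword check instead of a real model, and both tools return canned or very limited results.

```python
from typing import Callable, Dict, List, Tuple


def search(query: str) -> str:
    """Hypothetical search tool; a real one would call a search API."""
    return f"(canned search result for {query!r})"


def calculator(expression: str) -> str:
    """Hypothetical math tool; deliberately handles only 'a * b' with integers."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "calculator": calculator}

Step = Tuple[str, str, str]  # (action, action input, observation)


def scripted_llm(question: str, history: List[Step]) -> Tuple[str, str]:
    """Stand-in for the LLM's decision step: returns (action, action_input)."""
    if history:
        # One observation is enough here: return it to the user.
        return "finish", history[-1][2]
    if "times" in question:
        return "calculator", "6 * 7"  # hard-coded input for this toy
    return "search", question


def run_agent(question: str, max_steps: int = 5) -> str:
    """Loop: ask the 'LLM' for an action, run the tool, record the observation."""
    history: List[Step] = []
    for _ in range(max_steps):
        action, action_input = scripted_llm(question, history)
        if action == "finish":
            return action_input
        observation = TOOLS[action](action_input)
        history.append((action, action_input, observation))
    return "Stopped: step limit reached"


answer = run_agent("What is 6 times 7?")
print(answer)  # prints 42
```

Unlike a fixed chain, the sequence of tool calls here is chosen at run time by the decision function, which is the role the LLM plays in a real agent.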

The potential for building personal assistants with agents seems nearly limitless. As LangChain highlights:

These agents can be used to power the next generation of personal assistants – systems that intelligently understand what you mean, and then can take actions to help you accomplish your goal.

To use LangChain agents, you need to understand a few concepts:

Tool: A function that performs a specific task, such as a Google search, a database lookup, a Python REPL, or another chain.

Agent: The agent to use, given as a string that references a supported agent class. LangChain supports a number of agents, and you can also build your own custom agents.

You can find a full list of predefined tools here and a full list of supported agents here.
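As a rough illustration of these two concepts, the sketch below models a tool as a named function with a description, and an agent as a class looked up by a string. Everything here (`SimpleTool`, `render_tool_prompt`, `ZeroShotSketchAgent`, `AGENT_REGISTRY`, `make_agent`) is a hypothetical name of my own, not part of LangChain's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class SimpleTool:
    """Illustrative stand-in for a tool: a named function plus a description."""
    name: str
    description: str
    func: Callable[[str], str]


def render_tool_prompt(tools: List[SimpleTool]) -> str:
    """Build text an agent could show the LLM so it can choose a tool."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


class ZeroShotSketchAgent:
    """Hypothetical agent class; it only indexes its tools by name."""

    def __init__(self, tools: List[SimpleTool]) -> None:
        self.tools: Dict[str, SimpleTool] = {t.name: t for t in tools}


# An agent is referenced by a string that maps to an agent class.
AGENT_REGISTRY = {"zero-shot-sketch": ZeroShotSketchAgent}


def make_agent(agent: str, tools: List[SimpleTool]) -> ZeroShotSketchAgent:
    """Look up the agent class by its string name and instantiate it."""
    return AGENT_REGISTRY[agent](tools)


tools = [
    SimpleTool("echo", "Repeats the input text.", lambda text: text),
    SimpleTool("upper", "Converts the input text to upper case.", str.upper),
]
agent = make_agent("zero-shot-sketch", tools)
print(render_tool_prompt(tools))
print(agent.tools["upper"].func("langchain"))
```

The descriptions matter because they are what the model reads when deciding which tool fits the request, so a vague description tends to lead to a poor choice.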

To better understand this, let’s look at two things that we know ChatGPT can’t do: look up information from after 2021 and (as of today) perform more complex mathematical operations.

https://www.mlq.ai/getting-started-with-langchain/

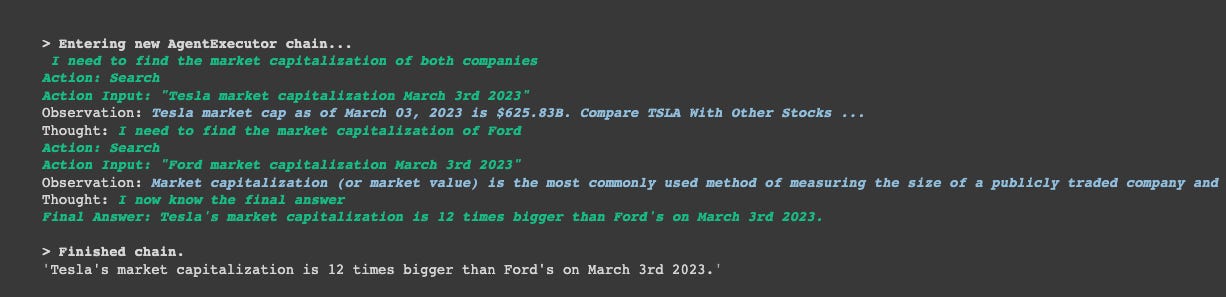

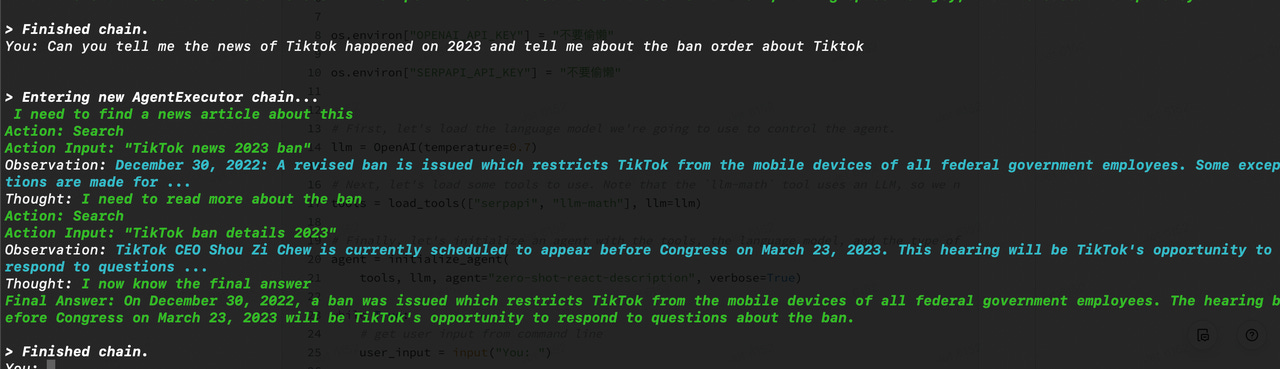

The following code snippet, combined with the model’s capabilities, effectively addresses the issue of timeliness, and the results look promising. It even answers questions about Tesla’s 2023 data, which isn’t included in GPT’s training set.

Here’s a sample of the toy program code.

```python
import os

from langchain.llms import OpenAI
from langchain.agents import initialize_agent, load_tools

os.environ["OPENAI_API_KEY"] = "***********************"
os.environ["SERPAPI_API_KEY"] = "***********************"

# First, load the language model that will control the agent.
llm = OpenAI(temperature=0.7)

# Next, load some tools. The `llm-math` tool uses an LLM, so we pass one in.
tools = load_tools(["serpapi", "llm-math"], llm=llm)

# Finally, initialize an agent with the tools, the language model,
# and the type of agent we want to use.
agent = initialize_agent(
    tools, llm, agent="zero-shot-react-description", verbose=True)

while True:
    # Get user input from the command line.
    user_input = input("You: ")

    # Exit the loop if the user says "bye" (checked before calling the agent).
    if user_input.lower() == "bye":
        break

    # Run the agent; verbose=True prints its reasoning and final answer.
    agent.run(user_input)
```

The next part is rather astonishing; it can perform multiple inferences and even search for information on its own!

💡 Let’s eagerly anticipate the kind of marvels ChatGPT Plugin will create and the magical powers it may possess.